Preface

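It has been a very, very, very long time since I last wrote a blog post. I had not even noticed that the server had expired without being renewed, and its resources were about to be released. Luckily I went through my mailbox yesterday, otherwise the blog would have been gone. What a relief. I renewed the blog server for a while and then felt like writing something, so here is this post.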


Getting started

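A couple of days ago I saw the Weibo trending topic "当家主母电视剧里有虐杀小动物嫌疑" (the TV series 当家主母 is suspected of abusing and killing a small animal). First, I already knew this series existed, and I had watched maybe a few dozen seconds of it; this kind of show is not to my taste. Second, to make my position clear: abusing and killing small animals is ugly behaviour that must never be tolerated. Finally, here is what I want to do: without having watched the series, find out who the real lead character is. How? Talk is cheap, show me the code!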


1. Data preparation

First I write a crawler that downloads the episode-by-episode synopses of all 35 episodes of 《当家主母》 and saves them to a txt file.

    import requests
    from lxml import etree

    headers = {
        "User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_13_2) AppleWebKit/537.36 "
                      "(KHTML, like Gecko) Chrome/63.0.3239.84 Safari/537.36"
    }
    url = "https://www.tvmao.com/drama/XGJiaHIl/episode/0-1"
    domain = "https://www.tvmao.com"

    # Fetch and parse the episode index page.
    response = requests.get(url=url, headers=headers)
    html = etree.HTML(response.content.decode())

    with open("djzm.txt", "w", encoding='utf-8') as f:
        # Each <li> in the episode pager links to one synopsis page.
        for li in html.xpath('//div[contains(@class,"epipage")]/ul/li'):
            page_url = domain + li.xpath('./a/@href')[0]
            print(page_url)
            page_response = requests.get(url=page_url, headers=headers)
            page_html = etree.HTML(page_response.content.decode())
            # Append every paragraph of the synopsis to the output file.
            article = page_html.xpath("//article/p/text()")
            for a in article:
                f.write(a)
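
The crawler builds each page address with domain + href, which only works when every href starts with "/". As a small sketch of a more forgiving alternative, the standard library's urljoin resolves an href against the index page URL. The absolute_url helper and the sample href values below are made up for illustration; I have not checked which form the site actually uses.

```python
from urllib.parse import urljoin

# URL of the episode index page, taken from the crawler above.
INDEX_URL = "https://www.tvmao.com/drama/XGJiaHIl/episode/0-1"


def absolute_url(href, base=INDEX_URL):
    """Resolve an href found on the index page against the page's own URL."""
    return urljoin(base, href)


# Hypothetical href values, only to show how each form is resolved.
samples = [
    "/drama/XGJiaHIl/episode/0-2",                         # root-relative
    "0-3",                                                 # relative to the current page
    "https://www.tvmao.com/drama/XGJiaHIl/episode/1-4",    # already absolute
]
for href in samples:
    print(absolute_url(href))
```

All three forms come out as full URLs, so the loop would not need to care how the site writes its links.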

2. Word cloud

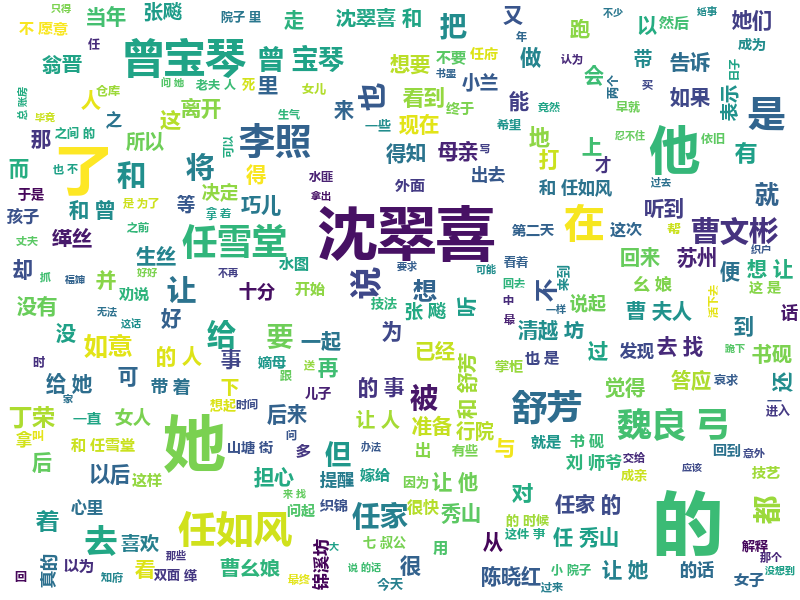

Build a word cloud to see how often (with what weight) each character appears in the plot.

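    import matplotlib as mpl
    mpl.use("Agg")  # non-interactive backend: figures are only written to files
    import matplotlib.pyplot as plt  # plotting library
    import jieba  # Chinese word segmentation library
    from wordcloud import WordCloud  # word cloud library

    filename = "./djzm.txt"
    txt = open(filename, "r", encoding="utf8", errors="ignore").read()
    words = jieba.cut(txt)
    result = "/".join(words)  # the tokens must be separated by "/" or " " to build the word cloud

    # Common words that are not character names
    no_name = ["说道", "出来", "如何", "跟着", "之后", "当真", "说话", "这里", "爹爹", "可是", "起来", "你们", "身上","两人","还是","这些","不会","自己","内力","怎么","南海","身子","脸上","原来","这么","弟子","众人", "这个","便是","倘若","突然","只是","不敢","他们","我们","见到","声音","心想","如此","只见","之中","不能","一个", "知道", "什么", "武功", "不是", "甚么", "一声", "咱们", "师父", "心中", "不知"]

    # WordCloud configuration
    wc = WordCloud(
        font_path=r"C:\Windows\Fonts\msyhbd.ttc",  # font with Chinese glyphs (raw string keeps the backslashes)
        background_color='white',  # background colour
        width=800, height=600,  # canvas width and height
        max_font_size=70, min_font_size=10,  # largest/smallest font size
        stopwords=no_name,  # stop words, left out of the word cloud
        max_words=2000,  # maximum number of words shown
        mode='RGBA'
    )
    wc.generate(result)

    # Name of the output image
    img_name = filename[:filename.rfind(".")] + "_词云图" + ".png"
    # Write the image
    wc.to_file(img_name)

    # Show the image. With the Agg backend selected above, plt.show() opens
    # no window; the PNG written by to_file() is the actual output.
    plt.figure("词云图")  # name of the figure
    plt.imshow(wc)  # render the word cloud as an image
    plt.axis("off")  # hide the axes
    plt.show()

This writes the word cloud image to djzm_词云图.png.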

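Looking at the word cloud, my "blind guess" is that 沈翠喜 (Shen Cuixi), 曾宝琴 (Zeng Baoqin), 任雪堂 (Ren Xuetang) and 任如风 (Ren Rufeng) look like lead characters, since they appear so often.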
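
A word cloud only shows the weights visually. To put numbers behind the guess, here is a rough sketch that counts how many times each name occurs in a text, using only the standard library. The count_names helper and the sample sentence are made up for illustration; in practice the text would be the contents of djzm.txt.

```python
from collections import Counter


def count_names(text, names):
    """Return (name, count) pairs, most frequent first.

    Counts are plain substring matches, so a name that is also an
    ordinary word (or part of a longer name) may be over-counted.
    """
    counts = Counter({name: text.count(name) for name in names})
    return counts.most_common()


# Made-up sample text, for illustration only.
sample = "沈翠喜见到曾宝琴。沈翠喜回府。"
for name, n in count_names(sample, ["沈翠喜", "曾宝琴", "任雪堂"]):
    print(name, n)
```

Unlike the word cloud, this needs no segmentation step, but it only counts the names it is given.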

3. Character social network

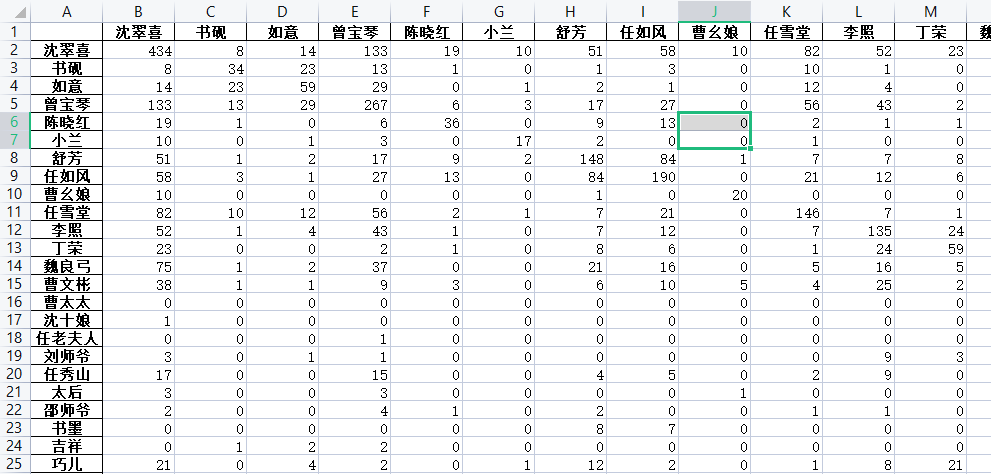

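Use Gephi to draw the characters' social network graph and see how strongly they are connected. First generate the co-occurrence matrix that Gephi needs: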

    import pandas as pd

    # Social network graph: co-occurrence matrix.
    # Two characters named in the same sentence (the text is split on "。")
    # are taken to have some kind of relationship.
    words = open('./djzm.txt', 'r', encoding='utf-8').read().replace('\n', '').split('。')
    words = pd.DataFrame(words, columns=['text'])

    nameall = ['沈翠喜', '书砚', '如意', '曾宝琴', '陈晓红', '小兰', '舒芳', '任如风', '曹幺娘', '任雪堂', '李照', '丁荣', '魏良弓', '曹文彬', '曹太太',
               '沈十娘','任老夫人','刘师爷','任秀山','太后','邵师爷','书墨','吉祥','巧儿','福婶','任福','吴巡抚','翁晋']
    nameall = pd.DataFrame(nameall, columns=['name'])

    words['wordnum'] = words.text.apply(lambda x: len(x.strip()))
    words = words.reset_index(drop=True)

    names = nameall.name.tolist()
    # Start from an all-zero integer matrix indexed by character name.
    relationmat = pd.DataFrame(0, index=names, columns=names)
    wordss = words.text.tolist()

    for k in range(len(wordss)):
        for i in names:
            for j in names:
                # The diagonal (i == j) ends up counting the sentences that mention i.
                if i in wordss[k] and j in wordss[k]:
                    relationmat.loc[i, j] += 1
        if k % 1000 == 0:
            print(k)  # progress indicator

    relationmat.to_excel('共现矩阵.xlsx')  # writing .xlsx requires openpyxl

The generated data looks like this:

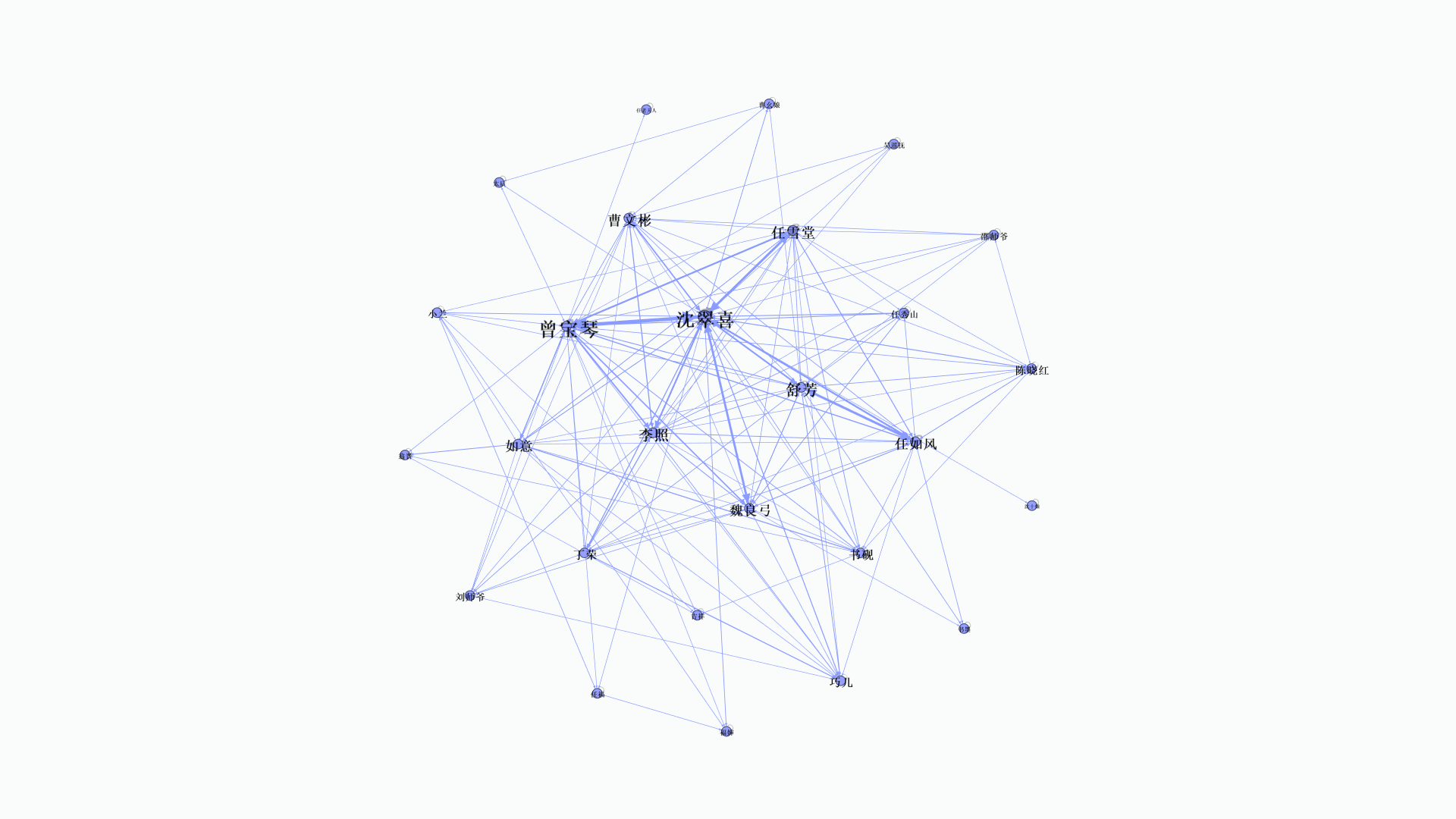

Then create a new project in Gephi, import the data above, adjust a few settings, and you get the network graph.
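
If importing the matrix directly gives trouble, another option is an edge table: as far as I know, Gephi's spreadsheet import also reads a CSV with Source, Target and Weight columns. The sketch below converts a co-occurrence matrix, held as a nested dict, into such a table. The helper names and the sample counts are made up for illustration, and this is not the import path used in this post.

```python
import csv
import io


def matrix_to_edges(matrix):
    """Return (source, target, weight) for each pair with a non-zero count.

    `matrix` maps row name -> {column name -> count}. Each unordered pair
    is emitted once, and the diagonal (a name with itself) is skipped.
    """
    names = list(matrix)
    edges = []
    for i, source in enumerate(names):
        for target in names[i + 1:]:
            weight = matrix[source][target]
            if weight > 0:
                edges.append((source, target, weight))
    return edges


def write_edges(edges, out):
    """Write the edges as a CSV table with Source, Target and Weight columns."""
    writer = csv.writer(out)
    writer.writerow(["Source", "Target", "Weight"])
    writer.writerows(edges)


# Made-up counts, for illustration only.
sample = {
    "沈翠喜": {"沈翠喜": 5, "曾宝琴": 3, "李照": 0},
    "曾宝琴": {"沈翠喜": 3, "曾宝琴": 4, "李照": 1},
    "李照": {"沈翠喜": 0, "曾宝琴": 1, "李照": 2},
}
buffer = io.StringIO()
write_edges(matrix_to_edges(sample), buffer)
print(buffer.getvalue())
```

With pandas, relationmat.to_dict(orient="index") gives a nested dict of this shape. To write a real file, pass open("edges.csv", "w", newline="", encoding="utf-8") instead of the StringIO buffer.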

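At a glance, 沈翠喜 (Shen Cuixi), 曾宝琴 (Zeng Baoqin), 李照 (Li Zhao), 魏良弓 (Wei Lianggong) and a few others have the most connections with the other characters.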
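
The same impression can be checked without a graph by summing each character's co-occurrences with everyone else (a weighted degree). This is only a rough sketch: the weighted_degree helper and the numbers in the sample matrix are made up for illustration and are not the real counts from the series.

```python
def weighted_degree(matrix):
    """Sum each row of a co-occurrence matrix, skipping the diagonal.

    `matrix` maps row name -> {column name -> count}.
    """
    return {
        name: sum(count for other, count in row.items() if other != name)
        for name, row in matrix.items()
    }


# Made-up counts, for illustration only.
sample = {
    "沈翠喜": {"沈翠喜": 5, "曾宝琴": 3, "李照": 2},
    "曾宝琴": {"沈翠喜": 3, "曾宝琴": 4, "李照": 1},
    "李照": {"沈翠喜": 2, "曾宝琴": 1, "李照": 2},
}
ranking = sorted(weighted_degree(sample).items(), key=lambda kv: kv[1], reverse=True)
for name, degree in ranking:
    print(name, degree)
```

The diagonal is skipped because in the matrix above it only counts how often a character is mentioned, not who they appear with.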

Ending

To sum up, 沈翠喜 is probably the number one lead of 《当家主母》!

The source code and related resource files are all here: https://gitee.com/shiyilin/PythonSpiders/tree/master/djzm