1. Data Preparation

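The official League of Legends Universe site lists every champion along with their background story. A small crawler fetches that data; the code is self-explanatory, so I won't go into much detail. It produces a CSV file, which is the data Gephi will use.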

```python
import csv
import json

import requests


class Spider:
    def __init__(self):
        # URL template for a single champion's detail data (JSON)
        self.champion_url = 'https://yz.lol.qq.com/v1/zh_cn/champions/{}/index.json'
        self.champions = list()
        # Edge list: one [source, target] pair per relationship
        self.champions_related = list()
        # Keys of the pairs already recorded, used to skip duplicates
        self.champions_related_repeat = list()

    def get_champion(self):
        """Fetch the name and slug of every champion."""
        url = 'https://yz.lol.qq.com/v1/zh_cn/champion-browse/index.json'
        resp = requests.get(url)
        resp_data = json.loads(resp.content.decode())
        for item in resp_data['champions']:
            self.champions.append({'name': item['name'], 'slug': item['slug']})

    def get_champion_item(self):
        """Fetch each champion's related champions and build the edge list."""
        for champion in self.champions:
            champion_url = self.champion_url.format(champion['slug'])
            resp = requests.get(champion_url)
            resp_data = json.loads(resp.content.decode())
            name = champion['name']
            for related in resp_data['related-champions']:
                # Check both orders so that A-B and B-A count as the same edge
                tmp_left = name + related['name']
                tmp_right = related['name'] + name
                if tmp_left not in self.champions_related_repeat and tmp_right not in self.champions_related_repeat:
                    self.champions_related.append([name, related['name']])
                    self.champions_related_repeat.append(tmp_left)
                    # The original appended tmp_left twice; record the reversed key too
                    self.champions_related_repeat.append(tmp_right)

    def save_item(self):
        """Write the edge list to lol_edge.csv with Source/Target headers."""
        headers = ['Source', 'Target']
        # Explicit encoding so the Chinese names are written the same way on every platform
        with open('lol_edge.csv', 'w', newline='', encoding='utf-8') as f:
            f_csv = csv.writer(f)
            f_csv.writerow(headers)
            f_csv.writerows(self.champions_related)

    def run(self):
        self.get_champion()
        self.get_champion_item()
        self.save_item()


if __name__ == "__main__":
    spider = Spider()
    spider.run()
```

2. Gephi

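Gephi is a free, open-source, cross-platform, JVM-based application for complex network analysis. It is mainly used for interactive visualization and exploration of all kinds of networks and complex systems, including dynamic and hierarchical graphs.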

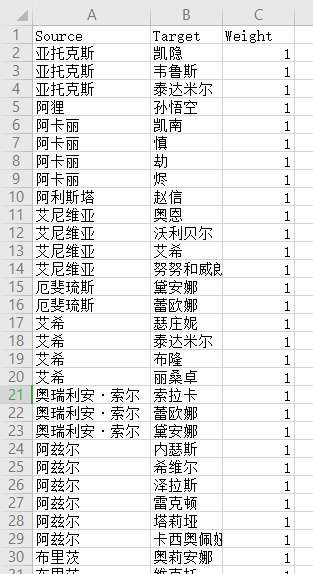

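The CSV file generated above:

This is the "edge" data that Gephi needs. For details on using Gephi itself, see the article "Python Data Visualization: Gephi Relationship Network Graphs".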
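Before importing, it can help to sanity-check the edge list. The sketch below counts how many edges touch each champion, assuming the file uses the `Source` and `Target` headers written by the crawler. The function name `degree_counts` and the sample rows are made up for illustration and are not part of the original code.

```python
import csv
import io
from collections import Counter


def degree_counts(edge_file):
    """Count how many edges touch each node in a Source/Target edge list."""
    reader = csv.DictReader(edge_file)
    degrees = Counter()
    for row in reader:
        # Each edge contributes one to the degree of both endpoints
        degrees[row["Source"]] += 1
        degrees[row["Target"]] += 1
    return degrees


# Hypothetical sample rows standing in for the contents of lol_edge.csv
sample = io.StringIO("Source,Target\nGaren,Lux\nGaren,Katarina\nLux,Ezreal\n")
degrees = degree_counts(sample)
for name, degree in degrees.most_common(3):
    print(name, degree)
```

To run it on the real file, pass an open file handle instead of the sample, for example `open('lol_edge.csv', newline='', encoding=...)` with whatever encoding the file was written in.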

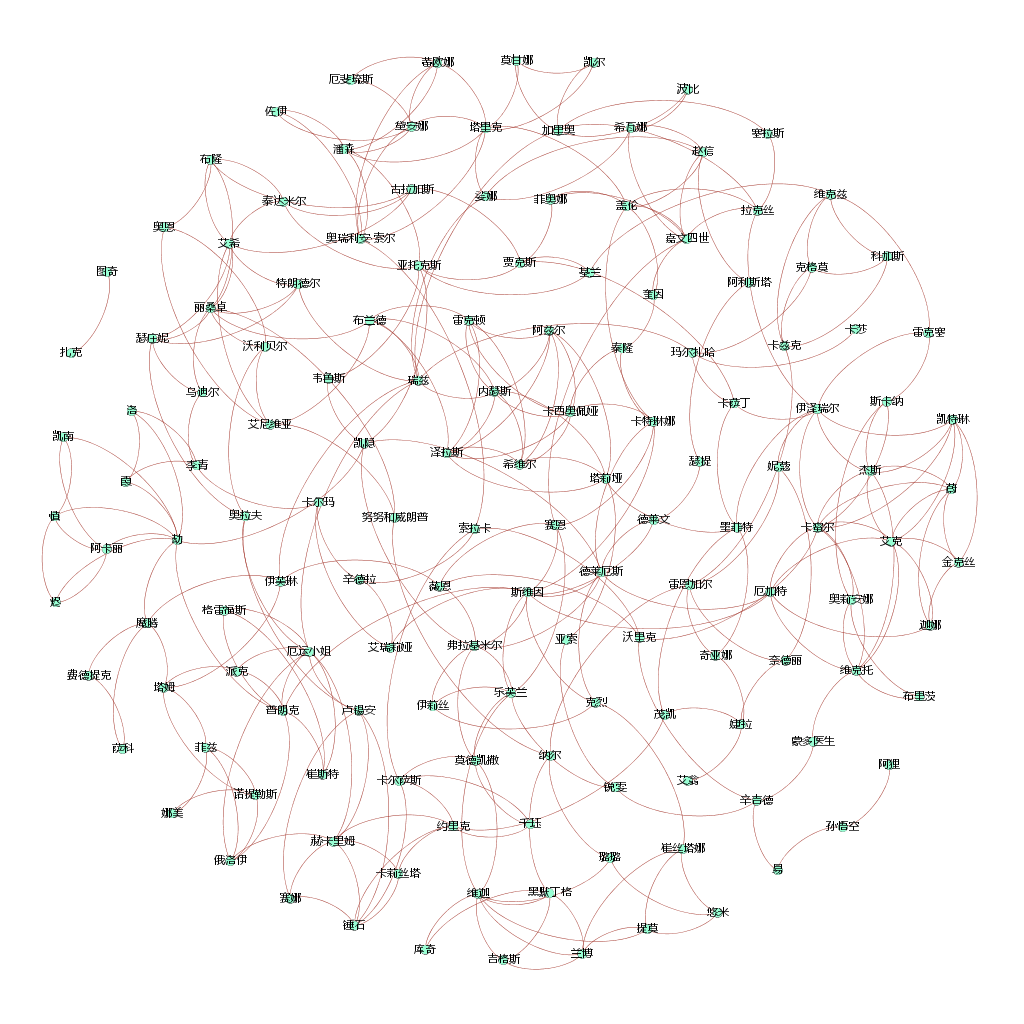

After importing the data:

The resulting network graph looks like this:

It looks pretty fancy, doesn't it?